Table of contents

- Kubernetes Architecture

- Step 1: Check out the code from GitHub

- Step 2: Create a Dockerfile

- Step 3: Build the Docker image and push to Docker Hub

- Step 4: Set up the master and worker nodes

- Step 5: Install the Kubernetes components on the master node

- Step 6: Install kubectl

- Step 7: Install kubelet on the worker node

- Step 8: Deploy the application

- Conclusion

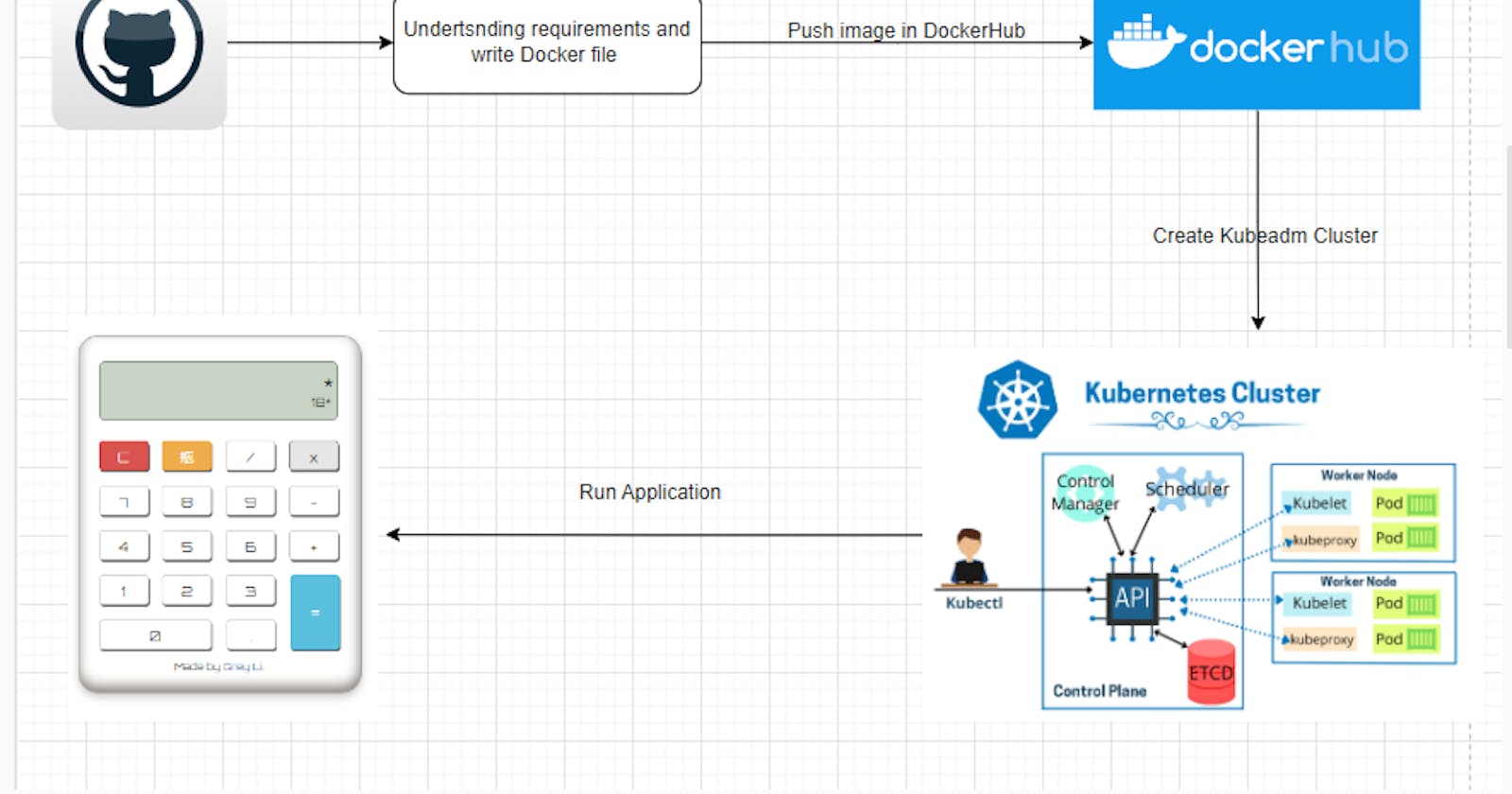

Deploying a calculator application on Kubernetes can be a daunting task if you're not familiar with the process. However, with the right tools and a clear understanding of Kubernetes architecture, it is not as complicated as it seems. In this article, we will walk through the steps to deploy a calculator application on Kubernetes using GitHub for source code management and Docker for deployment.

Before we get started, let's take a moment to familiarize ourselves with some of the key components of Kubernetes architecture.

Kubernetes Architecture

Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. At its core, a Kubernetes cluster has a master node (also called the control plane) and one or more worker nodes. The master node is responsible for managing the cluster, while the worker nodes run the containers.

Here are the key components of a Kubernetes architecture:

API Server: The API server is the central control point for the cluster. It exposes the Kubernetes API, which enables the communication between the Kubernetes control plane and the worker nodes.

Etcd: Etcd is a distributed key-value store that holds the configuration and state data for the Kubernetes cluster.

Scheduler: The scheduler assigns pods to nodes based on resource availability and constraints.
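To make the scheduling idea concrete, here is a minimal sketch in Python. It is not the real scheduler, which weighs many more factors; it only assumes that each node advertises free CPU and memory and that a pod requests some of each. The names `Node`, `PodRequest`, and `pick_node` are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Return the feasible node with the most free CPU, or None if nothing fits."""
    # Filter step: keep only nodes that can satisfy the pod's resource requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Score step: a deliberately simple rule, prefer the node with the most free CPU.
    return max(feasible, key=lambda n: n.free_cpu_millicores)


nodes = [
    Node("worker-1", free_cpu_millicores=500, free_memory_mib=1024),
    Node("worker-2", free_cpu_millicores=1500, free_memory_mib=2048),
]
chosen = pick_node(PodRequest("calculator", cpu_millicores=250, memory_mib=256), nodes)
print(chosen.name if chosen else "unschedulable")
```

Both nodes can fit the pod here, so the scoring rule decides and the sketch picks `worker-2`.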

Kubelet: The kubelet is an agent that runs on each worker node in a Kubernetes cluster. It communicates with the Kubernetes API server on the master node and ensures that the containers of the pods assigned to its node are running as expected. Working through the node's container runtime, the kubelet performs tasks such as:

- Pulling container images from a container registry like Docker Hub

- Starting and stopping containers

- Monitoring containers for failure

- Reporting container status to the Kubernetes API server

The kubelet works in conjunction with other Kubernetes components such as the API server, etcd, and container runtime to manage containerized applications in a Kubernetes cluster.
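The kubelet's job can be pictured as repeatedly comparing what should run on the node with what is actually running. The Python sketch below illustrates only that comparison; the function `plan_sync` and the image names are hypothetical, and the real kubelet delegates the actual work to the container runtime.

```python
from typing import Dict, List


def plan_sync(desired: Dict[str, str], running: Dict[str, str]) -> List[str]:
    """Compare desired containers (name -> image) with running ones and list actions."""
    actions: List[str] = []
    for name, image in sorted(desired.items()):
        if name not in running:
            # Container is missing: fetch its image and start it.
            actions.append(f"pull {image}")
            actions.append(f"start {name}")
        elif running[name] != image:
            # Container runs an outdated image: replace it.
            actions.append(f"stop {name}")
            actions.append(f"pull {image}")
            actions.append(f"start {name}")
    for name in sorted(running):
        if name not in desired:
            # Container is no longer wanted on this node.
            actions.append(f"stop {name}")
    return actions


desired = {"calculator": "example/calculator:1.0"}  # hypothetical image name
running = {"old-sidecar": "example/sidecar:0.9"}    # hypothetical leftover container
for action in plan_sync(desired, running):
    print(action)
```

When the desired and running sets already match, the function returns an empty list, which corresponds to the kubelet having nothing to do.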

Kube-proxy: Kube-proxy is a network proxy that runs on each worker node in a Kubernetes cluster. It maintains the network rules on the node that allow network communication to and from the pods. Kube-proxy performs tasks such as:

- Routing traffic addressed to a service's IP address and port to one of the pods behind that service

- Load balancing traffic between multiple pods

Kube-proxy does not enforce network policies; rules that restrict traffic between pods are implemented by the cluster's network plugin.

Kube-proxy works closely with the Kubernetes service abstraction, which provides a stable IP address and DNS name for pods running in a Kubernetes cluster.
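As a rough illustration of how a stable service address maps onto changing pods, the Python sketch below keeps a table from a service address to its pod endpoints and hands out backends in turn. It is only a model: the real kube-proxy programs kernel-level rules rather than forwarding traffic in Python, and the class `ServiceTable` and all IP addresses are made up for this example. Round-robin is used here simply to keep the behavior predictable.

```python
import itertools
from typing import Dict, Iterator, List, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class ServiceTable:
    """Toy mapping from a service address to the pods that back it."""

    def __init__(self) -> None:
        self._backends: Dict[Endpoint, Iterator[Endpoint]] = {}

    def set_endpoints(self, service: Endpoint, pods: List[Endpoint]) -> None:
        if not pods:
            raise ValueError("a service needs at least one pod endpoint")
        # Cycle through the pods so consecutive requests are spread across them.
        self._backends[service] = itertools.cycle(list(pods))

    def route(self, service: Endpoint) -> Endpoint:
        """Return the pod endpoint that should receive the next request."""
        return next(self._backends[service])


table = ServiceTable()
calculator_service = ("10.96.0.10", 80)  # hypothetical stable service address
table.set_endpoints(calculator_service, [("10.244.1.5", 8080), ("10.244.1.6", 8080)])
picks = [table.route(calculator_service) for _ in range(3)]
print(picks)
```

Clients only ever see the service address; the pods behind it can be replaced by calling `set_endpoints` again without clients noticing.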

Controller Manager: The controller manager (kube-controller-manager) runs on the master node in a Kubernetes cluster. It runs the cluster's controllers, which are control loops that watch the current state of the cluster and work to move it toward the desired state. The controller manager includes several controllers, such as:

- Node Controller: Monitors the state of worker nodes and takes action if a node becomes unhealthy or unresponsive.

- Replication Controller: Ensures that the desired number of replicas of a pod is running in the cluster.

- Endpoint Controller: Populates the Endpoints objects, which record the pods behind each service and so serve as a discovery mechanism for services.

- Service Account and Token Controllers: Create default service accounts and API access tokens for new namespaces, which pods use to authenticate to the API server.

Together, these components work to ensure that the Kubernetes cluster is functioning properly and that containerized applications are running as expected.
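The pattern these controllers share is a reconcile step: observe the current state, compare it with the desired state, and decide what to change. A minimal sketch of that step for replica counts might look like the following; the function `reconcile` and the pod names are hypothetical.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, running_pods: List[str]) -> Dict[str, object]:
    """Decide how many pods to create or which pods to delete to reach the target."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: ask for the missing number to be created.
        return {"create": diff, "delete": []}
    # Too many pods (or exactly enough): the surplus is whatever lies past the target count.
    surplus = running_pods[desired_replicas:]
    return {"create": 0, "delete": surplus}


print(reconcile(3, ["calc-a"]))
print(reconcile(1, ["calc-a", "calc-b"]))
```

A real controller runs this kind of comparison continuously, so the cluster converges on the desired state even after pods crash or nodes disappear.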

In summary, the kubelet, kube-proxy, and the controller manager are key components in Kubernetes architecture. The kubelet ensures that containers are running as expected, kube-proxy handles network communication to and from the pods, and the controller manager runs the controllers that keep the cluster in its desired state.

Kubectl: Kubectl (Kubernetes Control) is a command-line tool used for managing Kubernetes clusters. It is the primary tool used by developers and administrators to interact with Kubernetes clusters and perform various operations, such as deploying applications, managing resources, and troubleshooting issues.

Step 1: Check out the code from GitHub

The first step is to check out the code from GitHub. Make sure to review the code to ensure that it meets your standards and requirements.

Step 2: Create a Dockerfile

Next, create a Dockerfile based on the requirements of your application. The Dockerfile should contain all the necessary instructions to build the image, including the base image, dependencies, and any custom configurations.
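The article does not show the Dockerfile itself, so the following is only a sketch of what one could contain. It assumes, purely for illustration, that the calculator is a Python application with a `requirements.txt` and an `app.py` entry point listening on port 8080; adjust the base image, dependency step, and start command to your application. The helper `render_dockerfile` is hypothetical and just assembles the file's text.

```python
import json
from typing import List


def render_dockerfile(base_image: str, port: int, start_command: List[str]) -> str:
    """Render a small Dockerfile for a hypothetical Python-based calculator app."""
    lines = [
        f"FROM {base_image}",
        "WORKDIR /app",
        "# Copy the dependency list first so this layer is cached between builds.",
        "COPY requirements.txt .",
        "RUN pip install --no-cache-dir -r requirements.txt",
        "# Copy the rest of the application source.",
        "COPY . .",
        f"EXPOSE {port}",
        # The exec form of CMD is a JSON array, so json.dumps produces valid syntax.
        f"CMD {json.dumps(start_command)}",
    ]
    return "\n".join(lines) + "\n"


dockerfile = render_dockerfile("python:3.11-slim", 8080, ["python", "app.py"])
print(dockerfile)
```

The printed text can be saved as a file named `Dockerfile` in the root of the checked-out repository.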

Step 3: Build the Docker image and push to Docker Hub

Once you have created the Dockerfile, use it to build the Docker image. After building, push the image to Docker Hub, where it will be available for Kubernetes to pull when we deploy our application.
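The build and push step comes down to two Docker CLI commands. The sketch below only assembles and prints them; the Docker Hub user name, image name, and tag are placeholders rather than values from this article, and you need to run `docker login` before pushing.

```python
import shlex
from typing import List


def image_ref(user: str, name: str, tag: str) -> str:
    """Build a Docker Hub image reference of the form user/name:tag."""
    return f"{user}/{name}:{tag}"


def build_and_push_commands(ref: str, context_dir: str = ".") -> List[List[str]]:
    """Return the docker commands that build the image and push it to the registry."""
    return [
        ["docker", "build", "-t", ref, context_dir],  # build from the Dockerfile in context_dir
        ["docker", "push", ref],                      # upload the tagged image
    ]


ref = image_ref("your-dockerhub-user", "calculator", "1.0")  # placeholder values
for cmd in build_and_push_commands(ref):
    print(shlex.join(cmd))
```

To execute the commands instead of printing them, each list can be passed to `subprocess.run(cmd, check=True)` on a machine where Docker is installed.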

Step 4: Set up the master and worker nodes

To set up the master and worker nodes, we will use Google Cloud Platform (GCP). Create two servers on GCP, one for the master node and one for the worker node.

Step 5: Install the Kubernetes components on the master node

On the master node, install the Kubernetes components, including the scheduler, etcd, API server, and controller manager. These components work together to manage the cluster.

Step 6: Install kubectl

Install kubectl on your local machine, which enables you to communicate with the API server on the master node.

Step 7: Install kubelet on the worker node

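Install kubelet on the worker node. The kubelet is responsible for communicating with the API server on the master node and ensuring that the containers are running as expected. The worker node also needs a container runtime, because the kubelet relies on it to actually run the containers.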
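This article does not say which installer is used to bring the nodes together. One common option is kubeadm, and the sketch below assumes it purely as an illustration: it assembles the command that initializes the master node and the command that joins a worker to it. The pod network range, the IP address, the token, and the hash are all placeholders; in practice `kubeadm init` prints the exact join command to use.

```python
import shlex
from typing import List

API_SERVER_PORT = 6443  # default secure port of the Kubernetes API server


def init_command(pod_cidr: str) -> List[str]:
    """Command to run on the master node to initialize the control plane."""
    return ["sudo", "kubeadm", "init", f"--pod-network-cidr={pod_cidr}"]


def join_command(master_ip: str, token: str, ca_cert_hash: str) -> List[str]:
    """Command to run on the worker node to join it to the cluster."""
    return [
        "sudo", "kubeadm", "join", f"{master_ip}:{API_SERVER_PORT}",
        "--token", token,
        "--discovery-token-ca-cert-hash", f"sha256:{ca_cert_hash}",
    ]


# All values below are placeholders, not real cluster details.
print(shlex.join(init_command("10.244.0.0/16")))
print(shlex.join(join_command("MASTER_IP", "TOKEN", "HASH")))
```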

Step 8: Deploy the application

Finally, we can deploy the application. To do this, we need to create a service_manifest.yaml and deployment_manifest.yaml.

Service manifest file:

In a Kubernetes architecture, a service manifest file is a YAML file that is used to define a network service for a set of pods running in a Kubernetes cluster.

A service manifest file specifies the following information:

- The name of the service

- The labels used to select the pods for the service

- The type of service (ClusterIP, NodePort, LoadBalancer, or ExternalName)

- The port number and protocol used by the service

- Optionally, annotations that attach additional metadata to the service

When a service manifest file is applied to a Kubernetes cluster, it creates a service object that provides a stable IP address and DNS name for the pods selected by the labels. The service can then be used by other pods or external clients to access the pods in a reliable and consistent manner.

The main benefits of using a service manifest file in Kubernetes are:

- Service discovery: Services provide a stable IP address and DNS name for the pods selected by the labels. This enables other pods or external clients to access the pods in a reliable and consistent manner, even if the pods are dynamically created or deleted.

- Load balancing: Services distribute incoming network traffic among the pods selected by the labels. This enables the deployment of applications that can handle a large number of requests and can scale horizontally.

- Routing and traffic management: A service routes traffic only to the pods whose labels match its selector, so changing the labels or the selector changes which pods receive traffic. This makes it possible to direct traffic to a specific set of pods within a larger application.

In summary, a service manifest file is a YAML file used to define a network service for a set of pods running in a Kubernetes cluster. It provides a stable IP address and DNS name for the pods, enables load balancing and traffic management, and supports the deployment of complex applications.
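As a sketch of what such a manifest contains, the Python snippet below builds a Service definition as a dictionary and prints it as JSON, which kubectl accepts as well as YAML. The service name, the `app: calculator` label, the ports, and the NodePort type are assumptions made for this example and are not taken from the article's actual manifest.

```python
import json


def service_manifest(name: str, app_label: str, port: int, target_port: int,
                     service_type: str = "NodePort") -> dict:
    """Build a Service that forwards `port` to `target_port` on pods labeled app=<app_label>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            # Pods carrying this label are selected as backends of the service.
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


service = service_manifest("calculator-service", "calculator", port=80, target_port=8080)
print(json.dumps(service, indent=2))
```

The selector is the important link: it must match the labels on the pods created by the deployment, otherwise the service has no backends.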

Deployment manifest file:

In a Kubernetes architecture, a deployment manifest file is a YAML file that is used to define and manage the deployment of a containerized application in a Kubernetes cluster.

The deployment manifest file specifies the desired state of the application and contains information such as:

- The name and version of the container image to be used

- The number of replicas of the application to be deployed

- The configuration settings for the application such as environment variables, command line arguments, and resource limits

- The pod template that defines the container specification for the application
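The fields listed above can be seen together in a minimal sketch. The snippet below builds a Deployment definition as a Python dictionary and prints it as JSON, which kubectl accepts as well as YAML. The image name, replica count, port, environment variable, and resource limits are illustrative assumptions, not values from the article's actual manifest.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, container_port: int) -> dict:
    """Build a Deployment that runs `replicas` copies of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                        "env": [{"name": "LOG_LEVEL", "value": "info"}],  # example setting
                        "resources": {"limits": {"cpu": "250m", "memory": "128Mi"}},
                    }]
                },
            },
        },
    }


deployment = deployment_manifest(
    "calculator", "your-dockerhub-user/calculator:1.0", replicas=2, container_port=8080
)
print(json.dumps(deployment, indent=2))
```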

After creating the service_manifest.yaml and deployment_manifest.yaml, apply them to the Kubernetes cluster using kubectl. This will create the necessary resources in the cluster and start the application.
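Applying the manifests is a matter of a few kubectl commands. The sketch below only assembles and prints them, using the two file names from this article; the helper `apply_commands` is hypothetical.

```python
import shlex
from typing import List


def apply_commands(manifest_files: List[str]) -> List[List[str]]:
    """Return kubectl commands that apply each manifest and then list the results."""
    commands = [["kubectl", "apply", "-f", path] for path in manifest_files]
    # After applying, list pods and services to check that the resources exist.
    commands.append(["kubectl", "get", "pods,services"])
    return commands


for cmd in apply_commands(["deployment_manifest.yaml", "service_manifest.yaml"]):
    print(shlex.join(cmd))
```

Because `kubectl apply` is declarative, running the same commands again after editing a manifest updates the existing resources instead of creating duplicates.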

Conclusion

In this article, we have walked through the process of deploying a calculator application on Kubernetes using GitHub for source code management and Docker for deployment.

To access the Docker image, click on DockerHub.

To access the source code, click on GitHub.